Permacomputing

In my last post I wrote about the Critical Infrastructure Lab launch event, held in Amsterdam. I attended the two-day event and will soon write my report and post it on the Bassetti Foundation website, but I couldn’t wait to write about the most engaging and challenging things I came across at a workshop led by Ola Bonati and Lucas Engelhardt: the concept and practices of permacomputing.

As you might imagine, the concept is related to the nature-based practices of permaculture. It encourages a more sustainable approach to computing that not only takes into account energy use and hardware and software lifespans but also promotes the use of already available computational resources.

From the starting point that technology has harmed nature, the concept aims to re-center technology and practice and enter into better relations with the Earth.

Practitioners propose a series of research methods that include living labs (we promote this approach in Responsible Innovation research too), science critique, interdisciplinarity and artistic research, which as many readers will know is very close to my own heart. Fields include Ecosystems and computational conditions of biodiversity; Sustainability and toxicity of computation; and Biodigitality and bioelectric energy.

The Permacomputing network wiki contains the following principles (as well as going into much more detail on all of the above):

Care for life. Create low-power systems that strengthen the biosphere and use the wide-area network sparingly. Minimize the use of artificial energy, fossil fuels and mineral resources. Don’t create systems that obfuscate waste.

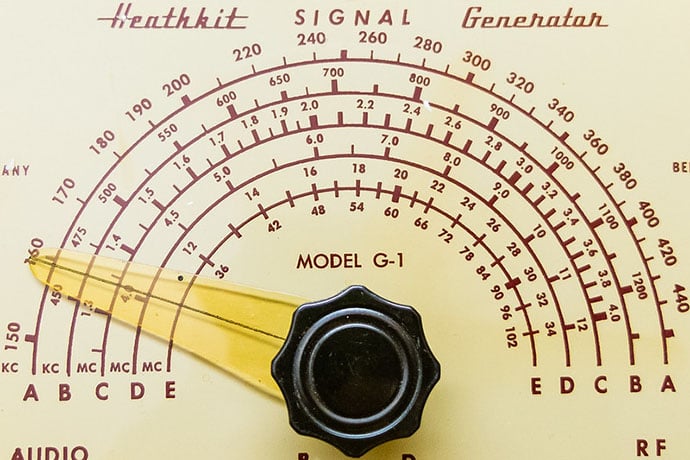

Care for the chips. Production of new computing hardware consumes a lot of energy and resources. Therefore, we need to maximize the lifespans of hardware components – especially microchips, because of their low material recyclability.

Keep it small. Small systems are more likely to have small hardware and energy requirements, as well as high understandability. They are easier to understand, manage, refactor and repurpose.

Hope for the best but prepare for the worst. It is a good practice to keep everything as resilient and collapse-tolerant as possible even if you don’t believe in these scenarios.

Keep it flexible. Flexibility means that a system can be used for a vast array of purposes, including ones it was not primarily designed for. Flexibility complements smallness and simplicity. In an ideal and elegant system, the three factors (smallness, simplicity and flexibility) support each other.

Build on solid ground. It is good to experiment with new ideas, concepts and languages, but depending on them is usually a bad idea. Appreciate mature technologies, clear ideas and well-understood theories when building something that is intended to last.

Amplify awareness. Computers were invented to assist people in their cognitive processes. “Intelligence amplification” was a good goal, but intelligence may also be used narrowly and blindly. It may therefore be a better idea to amplify awareness.

Expose everything. Don’t hide information!

Respond to changes. Computing systems should adapt to the changes in their operating environments (especially in relation to energy and heat). 24/7 availability of all parts of the system should not be required, and neither should a constant operating performance (e.g. networking speed).

Everything has its place. Be part of your local energy/matter circulations, ecosystems and cultures. Cherish locality, avoid centralization. Strengthen the local roots of the technology you use and create.
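To make the "Respond to changes" principle a little more concrete, here is a rough sketch of my own (it is not taken from the wiki). It assumes a small machine that can read its battery level and temperature, and it simply decides which jobs to run now and which to leave for later. The names `Task` and `plan`, and the threshold values, are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass
class Task:
    name: str
    essential: bool  # should still run when energy is scarce


def plan(tasks, battery_fraction, temperature_c,
         low_battery=0.3, max_temp_c=70.0):
    """Return the tasks to run now; everything else is deferred.

    The thresholds are illustrative assumptions, not recommended values.
    """
    if temperature_c >= max_temp_c:
        # Too hot: pause everything and let the hardware cool down.
        return []
    if battery_fraction < low_battery:
        # Energy is scarce: keep only the essential work going.
        return [t for t in tasks if t.essential]
    # Plenty of energy and a safe temperature: run everything.
    return list(tasks)


tasks = [Task("serve_pages", essential=True),
         Task("rebuild_search_index", essential=False)]

for battery, temp in [(0.9, 40.0), (0.1, 40.0), (0.9, 80.0)]:
    names = [t.name for t in plan(tasks, battery, temp)]
    print(f"battery={battery}, temp={temp}: {names}")
```

The point of the sketch is the design choice rather than the code itself: the system accepts that some work will wait, instead of assuming constant power and constant performance.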

The wiki also has a library and a page of the concepts and ideas needed to discuss permacomputing. You can find links to projects, technology assessments and information about courses and workshops, as well as lots of communities to investigate and join, and guidance on how to contribute to the wiki.

Why not join the discussion and spread the word?